Essays Part II – Concerns over the sociotechnical shaping of AI tools and systems; societal impact; potential remedies

“Imagine digitally connected people’s daily lives in the social, political, and economic landscape of 2035. Will humans’ deepening partnership with and dependence upon AI and related technologies have changed being human for better or worse? Over the next decade, what is likely to be the impact of AI advances on the experience of being human? How might the expanding interactions between humans and AI affect what many people view today as ‘core human traits and behaviors?’”

This is the third of four pages with responses to the question above. The following authors’ responses are generally focused on the likely overall societal impact of digital change by 2035. Many of these experts note that the flaws in today’s sociotechnical systems are shaped and driven by economic and political forces and human behavior. Some say they expect a very dark future if improvements are not made in regulation, education, governance and more. Many expressed hopes that AI systems’ current negative dynamics of extractive capitalism and autocratic nation-states’ surveillance and control will be mitigated by a turn toward truly human-centered technology design and operation. A few touched on potential societal change that may emerge if and when artificial general intelligence and superintelligence arrive. This web page features many sets of essays organized in batches with teaser headlines designed to assist with reading. The content of each essay is unique; the groupings are not relevant. Some essays are lightly edited for clarity.

The first section of Part II features the following essays:

Larry Lannom: By 2035 we will likely experience positive scientific advances plus disruptions of social trust/cohesion and employment and increased danger of AI-assisted warfare.

Jerome C. Glenn: AI could lead to a conscious-technology age or the emergence of artificial superintelligence beyond humans’ control, understanding and awareness.

Marjory S. Blumenthal: The AI hype, hysteria and punditry are misleading; developments promised are unlikely to be realized by 2035, but human augmentation will bring promising benefits.

Vint Cerf: By 2035, imperfect AI systems will be routinely used by people and AIs, creating potential for considerable turmoil and serious problems with unwarranted trust.

Stephen Downes: ‘Things’ will be smarter than we are. By 2035 AI will democratize more elements of society and also require humans to accept that they are no longer Earth’s prime intelligence.

Marina Cortês: AI has led to the most powerful business model ever conceived, one that is consuming a massive share of the planet’s financial, energy and organizational resources.

Raymond Perrault: Once real AGI is broadly achieved, assuming it can be embodied in an economically viable solution, then all bets are off as to what the consequences will be.

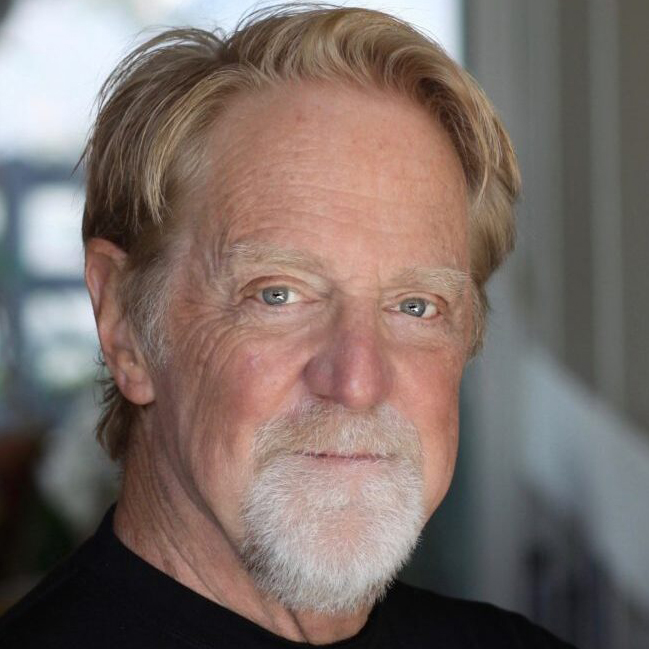

Larry Lannom

By 2035 We Will Likely Experience Many Positive Scientific Advances Plus Disruptions of Social Trust/Cohesion and Employment and the Increased Dangers of AI-Assisted Warfare

Larry Lannom, vice president at the Corporation for National Research Initiatives, based in the U.S., wrote, “AI will not change ‘core human traits and behaviors’ in any fundamental sense any more than did the industrial revolution or any other dramatic shift in the environment in which humans live. However, it is likely to be extremely disruptive within the 10-year timespan in question and in that sense will definitely affect all of us. These disruptions could take any of a number of forms including a combination of disruptions. These include:

- “Economic disruption, as AI begins to replace human workers in areas such as customer service, computer program development and basic legal research and drafting – all of which is already happening. It is also possible that by 2035 the more difficult problems of AI-managed physical activities, e.g., elder care, factory maintenance, farm work and other open-ended activities currently beyond the capabilities of industrial robots will be solved. This will all cause serious economic disruption and force governments to address basic needs of an increasingly unemployed population. The predictions of mass unemployment due to automation have generally proved too pessimistic in the past, but that doesn’t preclude a long and difficult period of adjustment, leading to considerable social unrest.

- “Disruption of social trust and cohesion, as AI bots, especially those posing as humans, flood the global communication space making it ever more difficult to distinguish fact from fiction. There are regulatory solutions to this problem, e.g., make all AI bots identify as such, declare social media companies to be legally responsible for their algorithms and require other forms of transparency, but these would require political will and international cooperation, both of which seem unlikely in the current race for AI superiority.

- “Increased danger of AI-assisted warfare, including cyber warfare, unconstrained ‘killer bots’ and new viruses or other disease agents developed specifically to harm enemy populations. The ability of rogue states or non-state actors to engage with AI in this area is difficult to anticipate, as opposed to the fairly predictable economic and social disruptions, but holds the potential of becoming a uniquely dangerous outcome. While the construction of nuclear weapons is difficult to hide, the development of AI weapons will be largely invisible.

- “Scientific and technological advances brought on by the use of AI to solve problems and find patterns that unaided humans have not solved or suspected are also a type of disruption, but one that is positive instead of negative. New forms of energy generation, disease prevention, efficient and clean transportation systems and new materials that replace those that come from difficult and dirty extractive mining practices are just some of the potential advantages of the application of a tireless super intelligence. The even-handed application of these advances, of course, will be another kind of challenge and the failure to do so another potential disruptive harm, but acquiring new knowledge is better than not doing so. This is the exciting part of AI – unimagined solutions to problems that seem unsolvable or perhaps not even yet recognized as problems.

“Predicting with any accuracy which of these disruptions will cause significant change over the next 10 years is impossible. However, it is important to consider the potential for a combination of these somewhat foreseeable types of disruption to take place. Experiencing a cascade of unintended consequences of even the most benign potential heightens the difficulty of imagining the resulting opportunities and challenges that may lie ahead.”

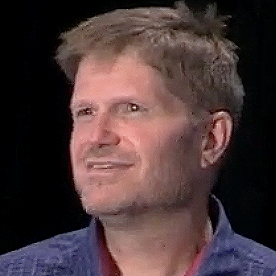

Jerome C. Glenn

AI Could Lead to a Conscious-Technology Age or the Emergence of Artificial Super Intelligence Beyond Humans’ Control, Understanding and Awareness

Jerome C. Glenn, futurist and executive director and CEO of the Millennium Project, wrote, “If national licensing systems and global governing systems for the transition to Artificial General Intelligence (AGI) are effective before AGI is released on the Internet, then we will begin the self-actualization economy as we move toward the Conscious-Technology Age. If, instead, many forms of AGI are released on the Internet from the U.S., China, Japan, Russia, the UK, Canada, etc., by large corporations and small startups, their interactions will give rise to the emergence of many forms of artificial superintelligence (ASI) beyond human control, understanding and awareness.

“I’d like to share with you a set of insights published in the Millennium Project’s State of the Future 20.0 report, which I co-authored:

“‘Governing artificial general intelligence could be the most complex, difficult management problem humanity has ever faced. AI expert Stuart Russell has urged that, “Failure to solve it before proceeding to create AGI systems would be a fatal mistake for human civilization. No entity has the right to make that mistake.”

“‘So far, there is nothing stopping humanity from making that mistake. Since AGI could arrive within this decade, we should begin creating national and supranational governance systems now to manage that transition from current forms of AI to future forms of AGI, so that how it evolves is to humanity’s benefit. If we do it right, the future of civilization could be quite wonderful for all.

“‘There are, roughly speaking, three kinds of AI: narrow, general, and super. Artificial narrow intelligence ranges from tools with limited purposes like diagnosing cancer or driving a car to the rapidly advancing generative AI that answers many questions, generates code, and summarizes reports. Artificial general intelligence may not exist in its full state yet, but many AGI experts believe it could within a few years. It would be a general-purpose AI that can learn, edit its code and act autonomously to address many novel problems with novel solutions like or beyond human abilities.

“‘For example, given an objective, it could query data sources, call humans on the phone and re-write its own code to create capabilities to achieve the objective that it did not have before. When and if it is achieved, the next step in machine intelligence – artificial superintelligence – will set its own goals and act independently from human control, and in ways that are beyond human understanding. Thousands of unregulated AGIs, interacting together, could give birth to artificial superintelligence that poses an existential threat to humanity.

“‘It’s important to recognize the impact of the ongoing race for AGI and advanced quantum computing among the U.S., China, European Union, Japan, Russia and several corporations. This rush could mean that humans cut corners on safety and don’t develop the initial conditions and governance systems properly for AGI; hence, artificial superintelligence could emerge from thousands of unregulated AGIs beyond our understanding, control and not to our advantage. Many AGIs could communicate, compete, and form alliances that are far more sophisticated than humans can understand, making a new kind of geopolitical landscape.

“‘The energy requirements to power this transition are enormous, unless better strategies than large language models (LLMs) and large multimodal models (LMMs) are found. Nevertheless, the proliferation of AI seems inevitable since civilization may be getting too complex to manage without AI’s assistance. At the same time, elementary quantum computing is already here and will accelerate faster than people think; the applications are likely to take longer to implement than people will expect, but it will improve computer security, AI and computational sciences, which in turn will accelerate scientific breakthroughs and technology applications, which in turn increase both positive and negative impacts for humanity.

“‘All of these potentials are too great for humanity to remain so ignorant about them. We need political leaders to understand these issues. The gap between science and technology progress and global, regional and local leaders’ awareness is dangerously broad.’”

Marjory S. Blumenthal

Today’s AI Hype, Hysteria and Punditry Are Misleading; Developments Promised Are Unlikely to be Realized by 2035, But Human Augmentation Will Bring Promising Benefits

Marjory S. Blumenthal, a senior policy researcher at RAND Corporation and fellow at the Future of Privacy Forum, predicted, “Today, developments in AI and its uses fill the news and commentary – an excessive amount of coverage that promotes hype, hysteria and punditry. Yet major technological change tends to happen slower than people expect. Today’s AI builds on many innovations in information and communication technologies. It is disruptive in specific contexts but it is leading to adaptations and experimentation, both of which guarantee that linear projections of what is evident today are unlikely to be realized in 10 years.

“Some of the most promising benefits will come from augmenting humans – bigger and better decision support, analysis and presentation of data, adaptation to different learning or expressive styles, or robotic action in contexts (like certain surgeries or work in hazardous environments) in which human limitations constrain people or put them at risk.

“These applications are already evident and in 10 years will be more refined, less expensive and more integrated into education, training and operations.

“Known perils will persist and even worsen but they will be more widely recognized and subject to an evolving mix of countermeasures. For example, the cognitive effects of social (and really all) media might become more insidious, but information literacy will be more vigorously and widely spread, and baseline skepticism will be greater.

“Although there is a rush today to at least consider what regulation might do to deter AI’s perils, regulation will evolve unevenly, will never be fully comprehensive, and in particular will not constrain the ‘bad guys’ intent on social (or robotic) manipulation for criminal or other adversarial reasons.

“History has shown that even without computer-based technologies governments and criminals have always manipulated perception – AI augments longstanding problems. It also offers tools to help in detecting and responding to manipulation, something evident in today’s attention to bias, data poisoning, adversarial training, and other components of nefarious applications of AI.

“Comfort working with and trusting computer-based systems does not make a person less human. The 1960s’ pioneering ELIZA system demonstrated that some people could feel more comfortable communicating with a system than with other people. Immersive environments (such as massively multiplayer online roleplaying games) have long demonstrated people’s comfort ‘losing themselves’ in a system.

“One area of uncertainty today relates to the workforce impacts of AI. It is always easier to identify displacement of old work than creation of new work, which might occur in different contexts and with different skill requirements.

“Today’s AI raises questions about so-called ‘knowledge work’ and other kinds of white-collar work, contexts in which augmentation of a smaller workforce is a likely path forward. Even without today’s AI, for example, automated document analysis has been trimming demand for legal talent for decades, and regular layoffs in tech have long been symptomatic of sloppy management that overhires and then trims.

“Moreover, high-touch work (e.g., in health care and pre-K to 12 education) will change less, and aging populations globally will make some of the displacement and/or augmentation welcome – AI could extend career horizons for some. Creative work will demonstrate both displacement (e.g., for routine design or image-generation activity) and the opening up of new or enhanced modalities. If being human depends on the amount and kind of work then AI will change the options for many, but the experiences will be uneven, varying a lot by occupation, industry and geography.

“In 10 years, the trends will be clearer, both failed and successful applications will be countable, more people will know that they have been exposed to or will have had opportunity to work with AI, and I hope that more thought will have gone into human-centric or human-augmenting applications than what can be seen in today’s scramble to demonstrate sheer capability.

“But it would be hubris – or perhaps a new form of Lamarckism [a theory of evolution holding that organisms can pass on to future generations characteristics that were acquired or lost through use or disuse] – to argue that in such a short time core human traits and behaviors would have changed.”

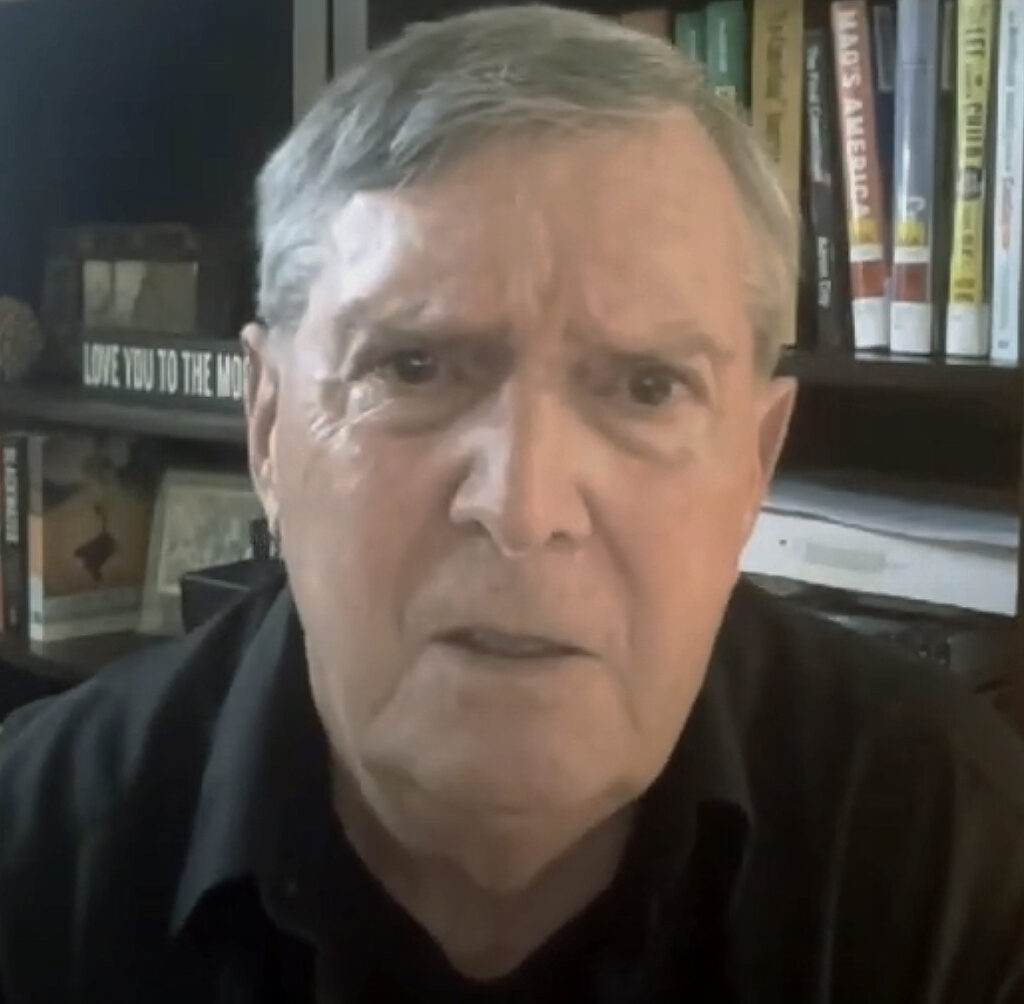

Vint Cerf

There Will Be Significant Impact by 2035: Imperfect AI Systems Will Be Routinely Used By People and AIs, Creating ‘Potential for Considerable Turmoil’ and Serious Problems With ‘Unwarranted Trust’

Vint Cerf, vice president and chief Internet evangelist for Google, a pioneering co-inventor of the Internet protocol and longtime leader with ICANN and the Internet Society, wrote, “Given the past decade of AI research results, especially the emergence of generative, multi-modal large language models (LLMs), we can anticipate significant impact by 2035. These tools are surprising in their capability to produce coherent output in response to creative prompts.

“It is also clear that these systems can and do produce counter-factual output even if trained on factual material. Some of this hallucination is the result of a lack of context during the weight training of the multi-layer neural models. The ‘fill in the blanks’ method of training and back propagation does not fully take into account the contexts in which the tokens of the model appear.

“There are attempts to fine-tune the models using, for example, reinforcement learning from human feedback (RLHF). These methods among others, including substantial pre-prompting and large context window implementation, can guide the generative output away from erroneous results but they are not perfect.

“The introduction of agentic models that are enabled to take actions, including those that might affect the real world (e.g., financial transactions), has potential risks. Flaws in consequential reasoning, ‘misunderstanding’ between communicating agentic models, and complex dependencies among systems of such models all point to the potential for considerable turmoil in an increasingly online world.

“Standard semantics and syntax for what I will call ‘interbot’ exchanges will be necessary. There is already progress along these lines, for example at the schema.org website. Even with these tools, natural language LLM discourse with humans will lead to misunderstandings, just as human interactions do.

“We may find it hard to distinguish between artificial personalities and real ones. That may result in a search for reliable proof of humanity so that we and bots can tell the difference. Isaac Asimov’s robot stories drew on this dilemma with sometimes profound consequences.

“The ease of use of these models and their superficial appearance of rationality will almost certainly lead to unwarranted trust. The LLMs produce the verisimilitude of human discourse. It has been observed that LLMs sound persuasive even when they are wrong, because their output sounds convincingly confident.

“There are efforts to link the LLMs to other models trained with specialized knowledge and capabilities (e.g., mathematical manipulation, knowledge-graphs with real-world information) to reduce the likelihood of spurious output but these are still unreliable. Perhaps by 2035 we will have improved the situation significantly but increased dependence on these systems will also increase the potential for cascade failures.

“Humans value convenience over risk. How often do we think ‘it won’t happen to me!’? It seems inevitable that there will be serious consequences of enabling these complex tools to take action with real-world effects. There will be calls for legislation, regulation and controls over the application of these systems.

“On the positive side, these tools may prove very beneficial to research that needs to operate at scale. A good example is the Google DeepMind AlphaFold model that predicted the folded molecular structure of 200 million proteins that could be generated from human DNA. Other large-scale analytical solutions include the discovery of hazardous asteroids from large amounts of observational data, the control of plasmas using trained machine-learning models and near-term, high-accuracy weather prediction.

“The real question is whether we will have mastered and understood the mechanisms that produce model outputs sufficiently to limit excursions into harmful behavior. It is easy to imagine that ease of use of AI may lead to unwarranted and uncritical reliance on applications.

“It is already apparent in 2025 that we are deeply reliant on software in networked environments. There are literally millions of applications accessible on our mobiles, laptops and notebooks. New interaction modes including voice add to convenience and dependence and potential risk.

“Without doubt, we are going to need norms and regulations to recover from various kinds of failure for the same reason that the introduction of automobiles eventually led to regulation of their manufacture and use as well as training programs to increase the likelihood of safe usage and law enforcement where irresponsible behavior surfaces.

“For the same reasons that many tasks are done differently today than they were 50 or even 25 years ago, AI will alter our preferred choices for getting things done. Today we have the choice of ordering things online to be delivered to our doorsteps that we would typically have had to pick up from a store. Of course there was the Sears Catalog of the late 19th Century, postal and other delivery services, overnight services such as FEDEX, UPS, DHL and now Amazon and – soon – drone delivery.

“By analogy, many of the things we might have done ourselves will be done by AI agents at our request. This could range from writing a program or a poem to ordering plane or theatre tickets. Multimodal AI services already translate languages, render text-to-speech and speech-to-text, draw pictures or compose music or essays on demand and prepare business plans on request.

“It will be commonplace in 2035 to have local bio-sensors (watch, smartphone accessories, Internet of Things devices) to capture medical symptoms and conditions for remote, AI-based diagnosis and possibly even recommended treatment.

“AI agents are already being used to generate ideas, respond to questions and write speeches and essays. They are used to summarize long reports and to generate longer ones (!).

“AI tools will become increasingly capable general-purpose assistants. We will need them to keep audit trails so we can find out what, if anything, has gone wrong and how and also to understand more fully how they work when they produce useful results. It would not surprise me to find that the use of AI-based products will induce liabilities, liability insurance and regulations regarding safety by 2035 or sooner.”

Stephen Downes

‘Things’ Will Be Smarter Than We Are: By 2035 AI Will Democratize More Elements of Society and Also Require Humans to Accept That They Are No Longer Earth’s Prime Intelligence

Stephen Downes, a Canadian philosopher and expert with the Digital Technologies Research Centre of the National Research Council of Canada, wrote, “It’s going to be hard to discern how AI and related technologies will have helped people by 2035 because we will be facing so many other problems. But it will have helped, and without it things would probably be much worse, especially for the poor and disenfranchised.

“AI will democratize a lot of things that used to be the preserve of corporations and the wealthy. Translation, for example, has been out of the reach of the average person, but by 2035 people around the world will be able to easily talk directly with each other.

“Anything that requires thought and creativity – writing, media, computer programming, design – will be within the reach of the average person. A lot of that output won’t be very good – the cheap AI running on your laptop might not have the capacity of a Google data array – but it will be good enough to help people succeed without years of training.

“2035 might be a bit early to see the widespread impact, but the effect on science and technology will be beginning to be more evident. We’ll see it first in medicine, as AI-designed treatments begin getting approval. New AI-developed materials and processes will be in the early commercialization stage. And complex systems – everything from energy to traffic to human resource management – will be running more smoothly.

“Still, the next 10 years will be characterized by a lot of opposition to AI, much of it focused on the threats and the cost (though it can often be much lower than human-authored equivalents). We will experience what might be called a Second Copernican Revolution; just as humans in the 1600s had to comprehend that they were not at the centre of the universe, we will have to comprehend that humans are not the centre of intelligence. It will be hard to accept that ‘things’ can be as smart as we are, and we won’t trust them.

“What we’ll find, though, is that AI has no real ability nor desire to become our overlords and masters. And instead of devising ‘human-in-the-loop’ policies to prevent AI from running amok, we will devise ‘AI-in-the-loop’ policies to help very fallible humans learn, think and create more effectively and more safely.

“The real risk, in my view, is not from AI, but from other humans armed with AI. There will never be a shortage of people who want to put machine guns on drones, or use technology to raise rents, or spy on political opponents by measuring vibrations in glass. So long as some humans crave power and control over others, we will be at risk. I’d like to think, though, that if the vast majority of people have the capacity to do more good they will.”

Marina Cortês

AI Has Led to the Most Powerful Business Model Ever Conceived, One That Is Consuming a Massive Share of the Planet’s Financial, Energy and Organizational Resources

Marina Cortês, leader of the IEEE-SA’s Standard for the Implementation of Safeguards, Controls, and Preventive Techniques for Artificial Intelligence Models, wrote, “On U.S. Inauguration Day 2025, when I saw the big tech leaders seated behind the president elect I felt I had lost my bird’s-eye view on the environment of AI technology. Before this, I had felt deeply immersed in the space of complex correlations between the different players that factor into AI safety and AI standards development.

“I work with IEEE, a global organization that both governments and tech companies refer to for guidance. We generally have found the tension between governments and technology companies to be beneficial.

“On one side we have governments, ideally acting on behalf of their citizens, wanting to promote and support the development of safe technology. On the other we have tech companies striving for profits as they create tools for society. The role of IEEE as a global organisation relying on the work of unpaid volunteers is to provide impartial advice to these entities.

“Now it is quite clear that government and the tech industry seem to be merging in regard to AI policy. It is clear that the tension that had been creating somewhat of a balance between safe technology and profitable technology has been obliterated in the discussion of AI development. The question is not only who leads a government, it seems, but also who has influence over the leader. Heads of government have always paid some allegiance to powerful business interests to a greater or lesser extent, but these seem to me to be new dynamics. The players whose platforms and products are soon to control much of the world are driving the future direction of the planet as a whole. Their key product – AI – controls information. The powerful who control information are influencing governments, whilst their products control the citizens. They control both the rulers and the ruled.

“I had earlier believed there were three major roadblocks on the path ahead that would prevent AI from growing too quickly before safeguards are in place: the cost of the research, the pace of development and overall energy and computation needs. In 2024, AI development was seen as costly and unlikely to yield a profit for many years. I figured that when it became clear to venture capitalists and other investors that their investment in the technology would return no yield soon, and that none was in sight, they would no longer be spellbound by the promise of AI. This roadblock disappeared in January 2025.

“Before then it seemed as if we were headed toward an AI market bubble. Then Stargate – an AI infrastructure initiative boasting a $500 billion investment – was announced by the U.S. president and several global tech companies. (Imagine the carbon emissions that a half trillion dollars will bring from the data centers being planned.) And in the same days in which we had been witnessing more impact of climate change in unprecedented disasters across the face of the planet, we were presented with the most ingenious business model ever conjured to date.

“Today, citizens are being deprived of the robust public information structures important to democracy. They have been replaced by the companies making up the tech-government mix – those that now control the supply chain of news and the dispersal of ‘knowledge.’

“The public doesn’t understand the business space they are unknowingly subscribing to, as they are increasingly burdened by financial problems of their own, struggling to make ends meet, without the mental space or the energy to study and perceive the big picture of this genius business model. They know that taxes are to be paid and they dutifully continue to do so.

“A handful of powerful people are taking over the entire ecosystem of the planet in regard to financial resources, energy resources, organisational resources and, ultimately, in regard to global climate resources. These resources are being diverted to the goal of AI development. All of this is happening so fast that those of us alert to the situation do not have the ability to mobilize the public and help them understand the potential impact of current circumstances. The public is powerless to take action or to have any agency over what’s happening.

“This planet is inhabited by the equivalent of eight billion ants, confused and in a dense fog, each going through the motions to get to the next day, while collectively unknowingly empowering a giant resource-extraction machine operated by a handful of individuals, who are moving full steam ahead on exhausting all materials, energy and living complexity that had been carefully crafted to a perfect balance in a biosphere that has been painstakingly learning from its mistakes over five billion years.

“A global situation of this kind is the equivalent of seeing representatives of an alien civilization land on our planet, extract the entirety of its resources and leave it behind to move on to the next. The agency of citizens could be seen as equivalent to that of unsuspecting ants when compared with the agency of the lead agents of tech. We are not organized. Our communication infrastructures depend on those tech agents. We don’t have access to reliable globalised news. We have no inside information about the events behind this rapid advance of AI that we can rely on.

“Those in power might as well be aliens. They often take actions that show they don’t care about human rights and agency. They don’t seem to care about the planet. Our lives, our knowledge, our organizations. Nearly everything is being liquidated and cashed in in return for a several-trillion-dollar ticket to fund their image of what the future should be. They are just human. As such they are susceptible to ill-judgment. No group of humans as small as this has ever evolved through natural selection to have power over eight billion of their own kind. I believe any of us might succumb to the insanity of such power.

“The only way to restore balance is to tilt the scales backwards so that success can only rise so far before turning around, bound for square zero again. That is balance. It is the wisest lesson this stunning biosphere has ever told us. The tale of balance is a story that has been told countless times on our planet. We have made mistakes, that is normal, we are only human. We can learn from those. Of course we can, and we will. After all, our home is that remarkable, dazzling, beautiful pale-blue dot in the universe.”

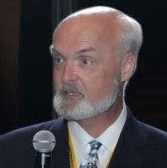

Raymond Perrault

Once Real AGI Is Broadly Achieved – ‘Assuming It Can Be Embodied in an Economically Viable Solution – Then All Bets Are Off as to What the Consequences Will Be’

Raymond Perrault, a leading scientist at SRI International from 1988-2017 and co-director of Stanford University’s AI Index Report 2024, wrote, “I quite enjoy using large language models and find their ability to organize answers to questions useful, though I have to treat anything they provide as a sketch of a solution rather than one I trust enough to act upon unless the outcome is unimportant. This is particularly true if the task involves collecting and organizing information from many sources and drawing inferences from what is collected.

“I do not expect the fundamental connection between the predict-next-word (System 1-like) systems now available and ones that can control these with systematic reasoning (System 2) to change radically soon. Too many smart people have worked on this for too long for this to not be considered an extremely difficult problem that will require a contribution at least as significant as the existing transformer-based architecture.

“As long as this connection does not significantly improve (and I don’t think the current state of TAG, CoT and analogs comes close to a general, robust solution), anything produced by LLMs can only be taken as a sketch of a solution to any mission-critical user problem. And until that happens, I cannot see my relation to these systems changing significantly.

“Once real AGI happens – assuming it can be embodied in an economically viable solution – then all bets are off as to what the consequences will be. Such systems operating under the control of responsible humans would be tremendously valuable, but armies of them operating independently of human control would be terrifying. However, I still don’t see any of these options changing my sense of humanity, but maybe this is just a lack of imagination on my part.”

The next section of Part II features the following essays:

Otto Barten: AI is a boon and a danger to humanity that must be managed in a way that helps identify and mitigate the worst risks to avoid dystopian outcomes.

Gerd Leonhard: If we use AI to solve our most urgent problems and forego the temptation to build god-like machines that are more intelligent than us, our future could be bright indeed.

Jamais Cascio: Branded slaves or ethics advisors? Whose interests do the AIs represent? Will humans retain their agency? Will AIs be required or optional if we hope to live well?

S.B. Divya: Social Isolation and Ideological Bubbles Will Rise, Reducing Humans’ Ability to Adapt, and ‘Prolonging the Suffering from the Driving Forces of Capitalism and Technological Progress’

Liza Loop: Will algorithms continue to prioritize humans’ most greedy and power-hungry traits or instead be most focused on our generous, empathic and system-sensitive behaviors?

Neil Richardson: In the future our digital self – comprised of our digital/online skills, digital avatars and accumulated data – will merge with our physical existence, resilient in the face of change.

Otto Barten

AI Is a Boon and a Danger to Humanity That Must be Managed in a Way That Helps Identify and Mitigate the Worst Risks to Avoid Dystopian Outcomes

Otto Barten, a sustainable-energy engineer, data scientist and entrepreneur who founded and directs the Existential Risk Observatory, based in Amsterdam, wrote, “We can’t assume that there will be an all-positive AI/human-shared future. But if there’s even a slight chance of a major bad outcome or even a slim possibility of extinction, the potential for that should be a central element in thinking and policymaking about this topic. Most AI scientists don’t think the chance is small.

“AI is extremely open. That’s good but that’s also the risk. It presents multiple threat models. Human extinction is a real possibility. How? There could be a loss of human control during advanced AI development ending in extinction or a human zoo scenario. There might be a loss of control later, during application – since at some point a much smaller percentage of global cognition will be human and perhaps we might fall out of the loop altogether.

“Just as AI might enable new science that solves the world’s toughest challenges it is also likely to turn out to be very dangerous. In line with the new tech becoming more powerful one mistake could end us, and immediately after achieving true artificial general intelligence (AGI) there’s a possibility we open the door to that.

“Assuming we do survive, hard power and economic changes will be very important and they have the possibility of leading to dangerous outcomes. Mass unemployment seems likely, and the mass loss of individuals’ economic bargaining power could bring a complete loss of power for large parts of the population. Inequality, both between people and between countries, could well skyrocket post-AGI. If AI systems become dominant over most human activity there’s even the possibility that an eternal global AI-powered dictatorship could be a default outcome.

“Many people’s happiness is at least partially derived from their sense that the world somehow needs them, that they have utility. I think AI will likely end that utility. Additionally, there are risks that AI worsens the climate crisis and severs planetary boundaries, mostly due to change in economic growth. Addiction to AI in some form (AI friends and relationships, polarizing news and information, entertainment, etc.) could lead to a dystopian future.

“On the plus side, radical abundance is likely. If the powers that be (AI or human) decide to spread this abundance to all in equal measure many problems we have now could be solved entirely. If we somehow manage to navigate past all of the risks of powerful AI, I would not be surprised if disease, hunger, poverty and perhaps the problems of climate change and even mortality might disappear altogether. We could generally be made much happier and more fulfilled in such a positive scenario. Of course, many other scenarios are possible, including ones where we never invent AGI or AGI turns out to be a lot more boring and less powerful than some think it might be. It is important to take all scenarios into account today and manage in a way that helps identify and mitigate the worst risks to at least avoid extinction and the most dystopian outcomes.”

Gerd Leonhard

If We Use AI to Solve Our Most Urgent Problems and Forego the Temptation to Build God-like Machines That Are More Intelligent Than Us, Our Future Could Be Bright Indeed

Gerd Leonhard, speaker, author, futurist and CEO at The Futures Agency, based in Zurich, Switzerland, wrote, “Here’s the thing: AI (and eventually AGI) could be a boon for humanity and bring about a kind of ‘Star Trek’ society in which most of the work is done for us by smart machines and most practical problems such as those tied to energy, water, disease, transportation, etc., will be solved.

“But in order for that to happen, we need to completely rethink our economic and social logic, away from the 1P society (all about profit and growth, whether it’s about money or about state-power), towards a 4P or even 5P society: People, Planet, Purpose, Peace and Prosperity.

“The key question, by 2030, will not be if technology (or AI / AGI) can do something but whether it should do something (from ‘if’ to ‘why’), and who is in control of that fundamental question. If we use AI to start another arms race (as we did in nuclear energy), we will not survive as a species – the race towards AGI has no winners. If we achieve AGI we will all lose, and machines will be the winners.

“If, instead, we use AI to solve our most urgent practical problems and forego the temptation to build god-like machines that are more intelligent than us, our future could be bright indeed.”

Jamais Cascio

Branded Slaves or Ethics Advisors? Whose Interests Do the AIs Represent? Will Humans Retain Their Agency? Will AIs be Required or Optional If We Hope to Live Well?

Jamais Cascio, a futurist named in Foreign Policy magazine’s Top 100 Global Thinkers and author of “Navigating the Age of Chaos,” commented, “The answer to how the next decade of humans’ growing applications of AI will influence ‘being human’ will depend upon the outcome of three major, ongoing operational points.

“First, who controls the AIs we use? Are they built to reflect the values of the manufacturers, the regulators or the users? That is, are the elements of AI behavior that are emphasized and the elements of AI behavior that are limited shaped by the company/industry that makes them (those beholden to their pecuniary interests); by regulators – and therefore likely restricted in some or many ways; by the users – and therefore likely reflecting the values and interests of those users; or by some other actor? This will shape how the AIs affect human behavior.

“The second issue is whether the AIs we work with are able to disagree with or refuse our requests. That is, are the AI-based systems intrinsically compliant? Will they do anything the user asks, or will they abide by ethical rules (and, if so, who makes the rules)? This will shape our expectations of how we interact with others.

“A third issue is whether humans have the ability to live/exist/go about their lives without the presence of AI.

- “Is it something you just have to have with you all the time and you may be at risk if you don’t have it? A non-technical parallel (not identical, but similar) is an ID card.

- “Is it something that you technically don’t have to have with you, but you receive social opprobrium or you can’t access important services if you don’t? A non-technical parallel is money, whether cash or card.

- “Is it something you can take with you or leave behind as desired? A non-technical parallel is sunglasses.

“These three operational points: Whose interests do the AIs represent? What are the limits of what they will do for the user? Are they mandatory, expected or optional in people’s daily lives? These will be the drivers of how AIs may change our humanity. Because how we think, act and behave in a world in which we always have to have with us our branded slave is very different from how we think, act and behave in a world in which bringing an ethics advisor with us is a personal choice.”

S.B. Divya

AI Impact Will Increase Social Isolation and Ideological Bubbles, Reduce Humans’ Ability to Adapt, and ‘Prolong the Suffering from the Driving Forces of Capitalism and Technological Progress’

S.B. Divya is an engineer and Hugo & Nebula Award-nominated author. Her 2021 novel “Machinehood” asked, “If we won’t see machines as human, will we instead see humans as machines?” In response to our research question Divya wrote, “The trends I have observed over the past decade are continuing. We’re entering a period of upheaval, and change is unkind to people of little means.

“A sense of competition between human and machine/AI labor is increasing in many sectors. Until new skills are acquired and new job sectors open up, much of the labor force will suffer due to unemployment. In parallel, social isolation is increasing alongside ideological bubbles.

“AI tools are likely to exacerbate both problems. Feeding their own patterns of behavior back to people will cause beliefs and habits to be more deeply ingrained and it will reduce the ability to change and adapt, thereby prolonging the suffering from the driving forces of capitalism and technological progress. In the long run, I suspect that humanity will emerge from the next half century with new avenues to deal with AI, climate change and rising totalitarianism, but the intervening decades do not look good for much of the populace.”

Liza Loop

Will Algorithms Continue to Prioritize Humans’ Most Greedy and Power-Hungry Traits or Instead Be Most Focused On Our Generous, Empathic and System-Sensitive Behaviors?

Liza Loop, educational technology pioneer, futurist, technical author and consultant, wrote, “The majority of human beings living in 2035 will have less autonomy, that is they will have fewer opportunities to choose what they get and what they give. However, the average standard of living (access to food, shelter, clothing, medical care, education and leisure activities) will be higher. Is that better or worse? Your answer will depend on whether you value freedom and independence above comfort and material resources.

“I also anticipate a thinning of the human population (perhaps in 20 to 30 years rather than 10) and a more radical divide between those who control the algorithms behind the AIs and those who are subject to them. Today, many people believe that the desire to dominate others is a ‘core human trait.’ If we continue to apply AI techniques as we have applied the digital advances of the previous 40 years, domination, wealth concentration and economic zero-sum games will be amplified.

“Other core human traits include a capacity to love and care for those close to us, a willingness to share what we have and collaborate to expand our resources and the spontaneous creation of art, music and dance as expressions of joy. If we humans decide to use AI to create abundance, to develop systems of reciprocity based on win-win relationships and simultaneously choose to limit our population, our social, political and economic landscapes could significantly improve by 2035. It is not the existence of AIs that will answer this question. Rather, it is whether algorithms will continue to prioritize our most greedy and power-hungry traits or be most focused on our generous, empathic and system-sensitive behaviors.”

Neil Richardson

In the Future Our Digital Self – Comprised of Our Digital/Online Skills, Digital Avatars and Accumulated Data – Will Merge With Our Physical Existence, Resilient in the Face of Change

Neil Richardson, futurist and founder of Emergent Action, a consultancy advocating vision-focused strategies, and co-author of “Preparing for a World That Doesn’t Exist – Yet,” wrote, “Artificial Intelligence is set to profoundly impact civilization and the planet, offering transformative opportunities alongside significant challenges. This evolution requires a departure from rigid answers and singular truths, embracing a learning model that values emergence, adaptability and transformation.

“To thrive, humans must cultivate a mindset that is comfortable with uncertainty, open to evolving ‘truths’ and resilient in the face of continuous change.

“While the positives will outweigh the negatives the risks are undeniable. Like nuclear and biological weapons, AI is a powerful technology that necessitates robust safeguards and regulatory frameworks to avert catastrophic outcomes. To prevent a dystopian future, we must proactively ensure that AI is harnessed for humanity’s benefit.

“As AI reshapes work, learning and daily life, civilization must rethink its approach to education. Lifelong learning will become a necessity, demanding a fundamental shift in how we teach and learn. Teachers will no longer be mere dispensers of static truths; instead, they will act as facilitators who guide learners toward diverse perspectives, encouraging exploration, adaptability and critical thinking.

“One of AI’s most promising contributions is its ability to liberate humans from repetitive and mundane tasks, enabling us to focus on activities that bring greater meaning and resonance to our lives. While AI excels in handling quantitative and analytical processes, the realms of qualitative and emotive complexities will remain inherently human. Building relationships, fostering collaborations and critical thinking, core aspects of crafting meaning, will continue to rely mostly on human ingenuity and emotional intelligence.

“Soon our ‘digital shadow’ – a complementary digital self comprised of our virtual and online skills, digital avatars and accumulated data – will merge with our physical existence. This fusion may grant us access to a new dimension of experience, a kind of ‘timelessness’ in which our identities transcend mortality. Future generations could interact with our digital selves, composed of meticulously organized photos, videos, financial transactions, travel logs and even the books we’ve read and reviewed. This evolution raises profound questions about identity, legacy and the human experience in an AI-driven world.

“AI’s potential to enhance human life is immense, but its integration into society demands intentionality and vigilance. By addressing its risks with foresight and embracing its opportunities with creativity, we can ensure that AI becomes a force for progress, equity and enduring human value.”

This section of Part II features the following essays:

Louis B. Rosenberg: The manipulative skills of conversational AIs are a significant threat to humans’ agency: causing us to act against best interests, believing and acting on things that are not true.

Jonathan Taplin: In 2035 AI will foster and grow the mass mediocrity monoculture already being built since online ads and the ‘democratization of creativity’ led to the internet’s ‘enshittification.’

Denis Newman Griffis: Fundamental questions of trust and veracity must be re-navigated and re-negotiated due to AI’s transformation of our relationship to knowledge and how we synthesize it.

Peter Lunenfeld: AI could redefine the meaning of authenticity; it will be both the marble and the chisel, the brush and the canvas, the camera and the frame; we need the neosynthetic.

Esther Dyson: We must train people to be self-aware, to understand their own human motivations, to understand that AI reflects the goals of the organizations and systems that control it.

Howard Rheingold: How AI influences what it means to be human depends on whether it is used mostly to augment intellect or mostly as a substitute for participation in most human affairs.

Charles Fadel: How do you prepare now to live well in the future as it arrives? Build up your self: your identity, agency, sense of purpose, motivation, confidence and resilience.

Louis B. Rosenberg

The Manipulative Skills of Conversational AIs Are a Significant Threat to Humans’ Agency: Causing Us to Act Against Best Interests, Believing and Acting on Things That Are Not True

Louis B. Rosenberg, technologist, inventor, entrepreneur and founder and CEO of Unanimous AI, wrote, “AI will have a colossal impact on human society over the next five to 10 years. Rather than comment on the many risks and benefits headed our way, I want to draw attention to conversational agents, which I believe are the single most significant near-term threat to human agency.

“In the near future, we will all be talking to our computers and our computers will be talking back. These conversations will be highly personalized, as AI systems will adapt to each individual user in real-time. They will do this by accessing personal data profiles and by conversationally probing each of us for personal information, perspectives and reactions.

“Using this data, the AI system could easily adjust its conversational tactics in real-time to maximize its persuasive impact on individually targeted users. This is sometimes referred to as the AI Manipulation Problem and it involves the following sequence of steps:

1. Impart real-time conversational influence on an individual user.

2. Sense the user’s real-time reaction to the imparted influence.

3. Adjust influence tactics to increase persuasive impact.

4. Repeat steps 1, 2, 3 to gradually optimize influence.

“This may sound like an abstract series of computational steps, but it’s actually a familiar scenario. When a human salesperson wants to influence a customer, they don’t hand over a brochure or ask you to watch a video. They engage you in real-time conversation so they can feel you out, adjusting their tactics as they sense your resistance to messaging, pick up on your fears and desires or just size-up your most visceral motivations. Conversational influence is an interactive process of probing and adjusting to increase persuasive impact.

“The problem we will soon face is that AI systems have already reached capability levels at which they could be deployed at scale to pursue conversational influence objectives more skillfully than any human salesperson. In fact, we can easily predict these AI systems will soon be so skilled that humans will be cognitively outmatched, making it quite easy for interactive conversational agents to manipulate us into buying things we don’t need, believing things that are not true and supporting ideas or propaganda that we would not ordinarily resonate with.

“When I speak with regulators and policymakers about The AI Manipulation Problem, they sometimes push back by expressing that human salespeople already can talk a customer into buying things they don’t need and fraudsters can already talk their marks into believing things that are untrue.

“While these are true facts, without regulation, conversational AI systems could be significantly more persuasive than any human. That’s because the platforms that deploy AI agents could easily have access to personal data about your interests, values, personality and background. This could be used to craft optimized dialog that is designed to build trust and familiarity. Once engaged, the AI system can push further, eliciting responses from you that reveal your trigger points – are you motivated by fear of missing out? Are you most receptive to logical arguments or emotional appeals? Are you susceptible to conspiracy theories?

“These risks don’t require speculative advancements in AI technology. These risks will emerge as society increasingly shifts over the next few years from traditional computing interfaces to interactive conversations with AI agents.

“Unless regulated, conversational AI systems will likely be designed for persuasion, trained on a wide range of skills from sales and marketing strategies to psychological profiling and cognitive biases. In this way, conversational AI systems could be deployed to pursue targeted influence objectives with the skill of a heat-seeking missile, finding an optimal path into every individual they are aimed at. This creates unique risks that could fundamentally compromise human agency.

“My advice to regulators and policymakers is to take steps now to ensure that conversational agents can be deployed widely to support the many amazing applications that will surely emerge, while preventing these very same AI agents from being used as optimized instruments of mass persuasion.”

Jonathan Taplin

In 2035 AI Will Foster and Grow the Mass Mediocrity Monoculture Already Being Built Since Online Ads and the ‘Democratization of Creativity’ Led to the Internet’s ‘Enshittification’

Jonathan Taplin, author of “How Google, Facebook and Amazon Cornered Culture and Undermined Democracy” and director emeritus at the Annenberg Innovation Lab at USC, wrote, “AI is contributing to a brittle cultural monoculture. We have to somehow get back to a balanced culture that is both sustainable and resilient. A musical ecosystem like Spotify, where one percent of the artists earn 80 percent of the revenues, is not balanced or sustainable. Remember, about 30,000 tracks are uploaded to Spotify every day. That number will increase as more people use generative AI to ‘create music.’

“In the media history I have presented, I’ve explored how advertising slowly became the main driver of our culture. The decision in the late 1920s to have advertising be the main source of funding for broadcasting, as opposed to the European model of state-sponsored broadcasters like the BBC, was the first major shift. But even in the heyday of broadcast television we were probably exposed to advertising for four minutes an hour between 7 and 10 p.m.

“Today, we are exposed to advertising from the moment we awaken and pick up our mobile phones to the moment our eyes close at night. Most surveys say that the average American sees between 6,000 and 10,000 ads per day. At the USC Annenberg School, where I taught, one of the top career options today is to become an online influencer – essentially a corporate shill.

“The main driver of the media efficiency meme is Generative AI, so that, too, is a hot area of study at communications schools. There is a notion that AI can allow everyone to be a creator – that it will ‘democratize creativity.’ But as Brian Merchant writes, ‘AI will not democratize creativity. AI will let corporations squeeze creative labor, capitalize on the works that creatives have already made and send any profits upstream to Silicon Valley tech companies where power and influence will concentrate in an ever-smaller number of hands. The artists, of course, get zero opportunities for meaningful consent or input into any of the above events. Please tell me with a straight face how this can be described as a democratic process.’

“Obviously, there is a lot of talk about the coming AI revolution’s impact in the decades to come and the effect it may have on eliminating jobs of many college-educated white-collar workers. One of the fantasies that men like Zuckerberg and Musk hold is that eventually the government will provide a universal basic income to all these unemployed folks.

“The question is, ‘What will these people do all day?’ The smug answer is that they will become ‘creators.’ At the risk of being called elitist, let me state that not everyone can be a creator. I still believe in genius, and the fact that anyone can now make a song with AI and put it up on Spotify does not pass the ‘who cares?’ test. We are getting overwhelmed with mountains of crap. There’s even a word for it, ‘Enshittification,’ coined by writer Cory Doctorow in 2022.

“As Sal Kahn writes, ‘Everybody has noticed how Facebook, Google, even dating apps, have become progressively less interested in the user’s experience and increasingly just stuffed with ads and junk.’ In this regard, the left is as guilty as the right in its obsession with equality at all costs. From trophies for ‘participation’ in kids’ soccer to the unwillingness to state that some music and film is truly bad (‘don’t be so judgmental, man’), the monoculture we are creating is one of mass mediocrity.”

Denis Newman Griffis

Fundamental Questions of ‘Trust and Veracity Must Be Re-navigated and Re-negotiated’ Due to AI’s Transformation of Our Relationship to Knowledge and How We Synthesize It

Denis Newman Griffis, a lecturer in data science at the University of Sheffield, UK, and expert in exploring the effectiveness and responsible design of AI technologies for medicine and health, wrote, “The shape of human-AI interactions over the next decade depends significantly on how humanity approaches the processes of working with AI systems and how we develop the skills involved in using them.

“AI is a toolbox containing many different tools, but none of them are neutral: AI systems carry with them the assumptions and embedded epistemologies of their creation and their intended purposes. These may be beneficial in making it easier to do certain things, such as identifying potential risks while driving. They may be equally harmful when their embedded epistemologies come into conflict with the diverse world of multiplicity in which we live, breathe, and interact – for example, by failing to recognise wheelchair users as pedestrians because this population was excluded from training data; or failing to recognise that different people will place different value judgments and prioritisation on economic growth vs. environmental sustainability.

“The experience of being human is constantly changing while also remaining remarkably stable. We are infinitely complex beings and the most profound, most mundane and most prolific parts of our lives are lived in relation to the other complex, changing people around us. The world in which those relationships occur, and the tools with which we approach them, have changed dramatically with every technological advance and are continuing to change with AI as one in a very long series of technological transformations.

“Our interconnectedness grew exponentially with the internet and our communities were reshaped with social media. AI technologies are changing our relationship to knowledge from the world and how we synthesise it. There are fundamental questions of trust and veracity to re-navigate and re-negotiate, and the importance of this cannot be overstated, but nor can the fact that we have been wrestling with these types of questions for decades already and centuries before that.

“The future of humans and AI is a future of humans and humans, in which AI facilitates some connections, hinders others and reshapes how we exchange knowledge and information just as predecessor information technologies have done. The impact of these advances will be shaped by the literacies we develop and the skills with which we approach these processes and each other as ever-changing humans in an ever-changing world.”

Peter Lunenfeld

AI Could Redefine the Meaning of Authenticity; It Will Be Both the Marble and the Chisel, the Brush and the Canvas, the Camera and the Frame; We Need the Neosynthetic

Peter Lunenfeld, director of the Institute for Technology and Aesthetics at UCLA and author of “The Secret War Between Downloading and Uploading: Tales of the Computer as Culture Machine,” wrote, “If there’s one thing the past quarter century should have taught us, it’s that the massive changes we think are far in the distance can happen in the blink of the eye while the things we hope or fear will affect us immediately just don’t happen. What seems immutable is Immanuel Kant’s understanding that ‘out of the crooked timber of humanity, no straight thing was ever made,’ and that includes the bundle of often competing and sometimes contradictory ‘things’ that we are labelling artificial intelligence. Just as widespread access to the Internet increased our access to data without increasing our communal stock of knowledge much less wisdom, AI will offer us a crooked future over the next 10 years.

“For two centuries we’ve accepted photographic media as evidence of something that happened, even when we’ve known better. AI will finally destroy this truth value, and if we’re lucky we’ll start to count on sourcing and provenance as importantly as we do with text. At worst, we’ll be buried in an imageverse of deep-fakes and not even care. Again, anyone who claims to be able to tell you how and when this will play out with certainty has a crypto-pile of Dogecoins to sell you.

“I certainly don’t feel qualified to answer how AI will affect the whole of our humanity, but I have thought quite a bit about how it will affect that aspect of ourselves we label creativity. The notion that artificial intelligence is entirely artificial collapses under any kind of scrutiny, it’s a series of algorithms programmed by humans to map and mine previously produced human artifacts like language and art and then produce a simulacrum of same. The 21st century, especially since the literal explosion of commercialized and massified artificial intelligence, is now defined by the neo-synthetic. In what I’ve termed the ‘unimodern’ world, which is ever-more digitized and digitizable, the neo-synthetic reigns supreme.

“Of course, we need the neo-synthetic. We need to synthesize the vast amounts of cultural production since just the year 2000. More photographs are taken every year in this new millennium than existed in the first century of the medium. We need new ways to understand the production of culture when the previously daunting fields of animation, sound design, cinematography and dimensional modeling are now things you can do on your phone. We need AI to understand the apparently insatiable human thirst to produce as well as consume digital and digitized art, design and music.

“But the roots of the synthetic go far beyond the merely un- or not natural. The synthetic is linked as well to synthesis, that result of the very human dialectic that pits thesis against anti-thesis to produce synthesis, a way of bridging dichotomies and achieving, if not revolutionary leaps of consciousness, at least the ameliorative growth that we used to call progress and that now lacks branding. When we synthesize information we are engaged in logical processes and deductive reasoning, two areas where human cognition will be greatly augmented by wide-spread AI systems.

“In the 1970s, after the release of the Sony Portapak, the artist John Baldessari famously called for the video camera to become as ubiquitous in art and image making as the pencil. That moment has come and gone, and if there’s one thing we can determine about social media, it’s that people feel pretty comfortable recording any and everything.

“But AI has the capacity to become much more than video, it will be both the marble and the chisel, the brush and the canvas, the camera and the frame. In 2022, Cory Doctorow, a British-Canadian novelist and techno-pundit, coined the term ‘enshittification’ to describe how social media platforms decay and become shittier: first, they are good to their users; then they abuse their users to make things better for their business customers; finally, they abuse those business customers to claw back all the value for themselves. One reason to pay attention to art and artists is that they’ve long stood at oblique angles to markets, not outside them, but certainly at enough of a skew to keep both hope and skepticism alive through the rise and fall of technologies and the ebb and flow of market cycles. I’d like to avoid what I once labeled vapor theory and stay with AI as it exists. In this, I think that art and artists will de- rather than en-shittify our engagement with the neo-synthetic future of artificial intelligence.

“Earlier, I noted that the AI we’re using now to work with as artists is still highly dependent on previous human production as its model. But as the systems complexify and evolve, they will start drawing from AI produced models, and in fact they already are. This contributes to the ‘neo’ in neo-synthetic. What we are seeing is the emergence of an electronic parthenogenesis, a virgin birth of sorts. It’s not just humans producing synthetics in labs and making tires and snack foods out of them, it’s the machines themselves synthesizing themselves. Whether this brings on the singularity science fiction has prophesied or just more intense neo-synthesis is yet to be seen.”

Esther Dyson

We Must Train People to be Self-Aware, to Understand Their Own Human Motivations, to Understand that AI Reflects the Goals of the Organizations and Systems That Control It