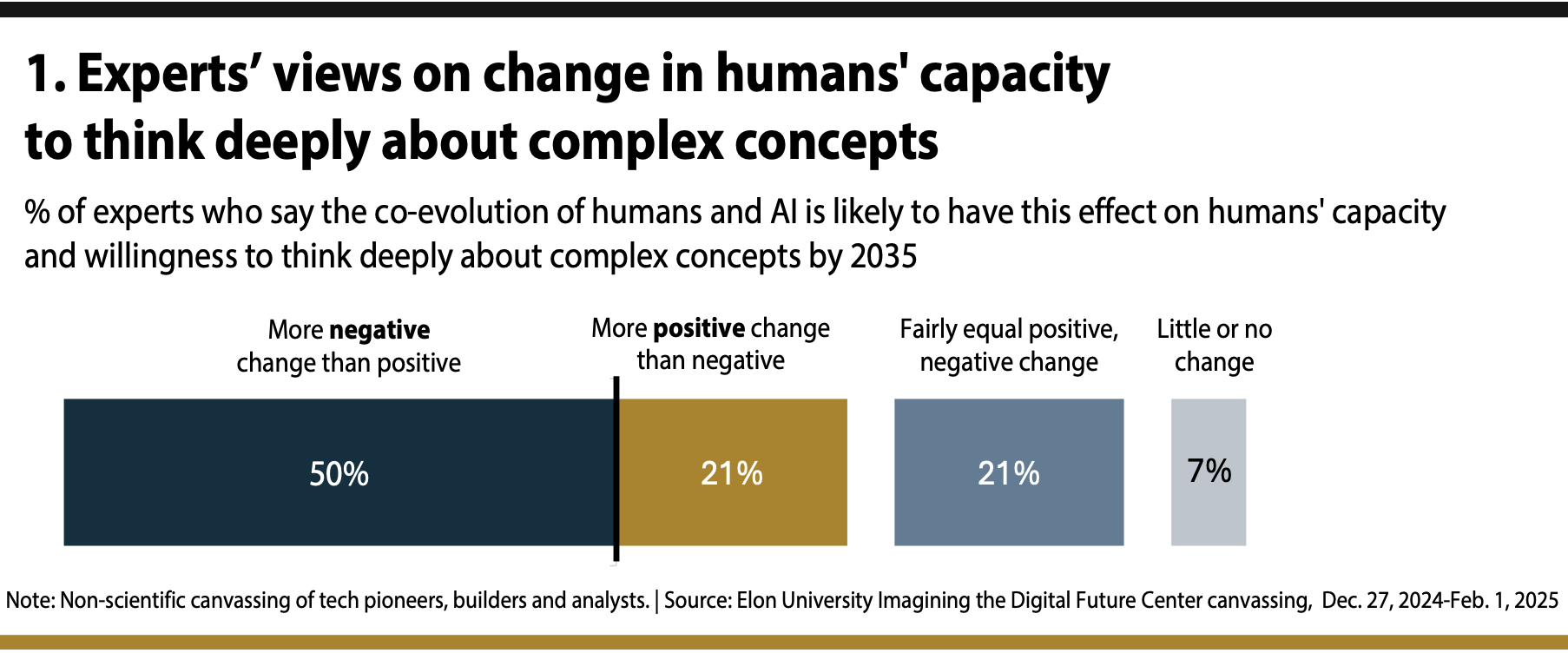

In 2025, these experts expected that by 2035 there would be…

50% – More negative change than positive change

21% – More positive change than negative change

21% – Fairly equal positive and negative change

7% – Little to no change

Many studies have shown that humans’ attention spans, along with their interest in and capacity for deep, analytical reading, are diminishing in the digital age. This decline has been attributed at least in part to the public’s voracious consumption of readily available quick hits of information and entertainment – especially on social media platforms and in instant search results. Over the past two decades, people have increasingly prioritized instant gratification over investing time in engaging with complex information. Many experts in this study noted that being informed enough to actively engage with complex concepts is crucial to the future of human society. Some argued that deep thinking builds phronēsis: the practical, context-sensitive capacity for self-correcting judgment, along with the practical wisdom that results from it, which cannot be obtained without hard work. Some fear that by 2035 more people will fail to summon the focus and motivation needed to seek out reliable sources as they build their foundational knowledge, potentially widening polarization, broadening inequities and diminishing human agency.

A selection of related quotes extracted from these experts’ longer essays:

“By 2035, the impacts will probably be mostly negative when it comes to changes in human abilities. We know from research in psychology that cognitive effort is aversive for most people in most circumstances. The ability of AI systems to perform increasingly powerful reasoning tasks will make it easy for most humans to avoid having to think hard and thus allow that muscle to atrophy even further. I worry that the urge to think critically will continue to dwindle, particularly as it becomes increasingly harder to find critical sources in a world in which much internet content is AI-generated. … Knowledge/expertise is likely to be downgraded as a core human value. A positive vision is that humans will embrace values like empathy and human connection more strongly, but I worry that it will take a different turn in which core humanity focuses more on the human body, with physical feats and violence becoming the new core trait of the species.” – Russell Poldrack, psychologist, neuroscientist and director of the Stanford Center for Reproducible Neuroscience

“The capacity for deep thinking about complex concepts may face particular challenges as AI systems offer increasingly sophisticated outputs that could reduce incentives for independent analysis. This dynamic recalls patterns we’ve observed in our research on community engagement with AI systems, where convenience can inadvertently reduce participatory decision-making. … By 2035, the quality of human-AI interaction will largely depend on the governance frameworks we develop today. … Success will require moving beyond technical capabilities to consider how these systems integrate with and support human social structures.” – Marine Ragnet, affiliate researcher at the New York University Peace Research and Education Program

“The risks and threats of such deskilling have been prominent in ethics and philosophy of technology as well as political philosophy for several decades now. … Our increasing love of and immersion into cultures of entertainment and spectacle distracts us from the hard work of pursuing skills and abilities central to civic/civil discourse and fruitful political engagement. … Should we indeed find ourselves living as the equivalent of medieval serfs in a newly established techno-monarchy, deprived of democratic freedoms and rights and public education that is still oriented toward fostering human autonomy, phronetic judgment and the civic virtues, then the next generation will be a generation of no-skilling as far as these and the other essential virtues are concerned.” – Charles Ess, professor emeritus of ethics at the University of Oslo

“While AI augments our capabilities, it may simultaneously weaken our independent competence in basic cognitive functions that historically required active engagement and repetition. … AI will turbocharge the pollution of our information ecosystem with sophisticated tools to create and disseminate misinformation and disinformation. This, in turn, will create deeper echo chambers and societal divisions and fragment shared cultural experiences. As AI becomes more pervasive, a new digital divide will emerge, creating societal hierarchies based on AI fluency. Individuals with greater access to and mastery of AI tools will occupy higher social strata. In contrast, those with limited access to or lower AI literacy will be marginalized, fundamentally reshaping social stratification in the digital age.” – Alexa Raad, longtime technology executive and host of the TechSequences podcast

“AI has the potential to improve the ‘cognitive scaffolding’ of human behavior just as computers, the internet and smartphones have done in the past. It will become easier to find and synthesize information, making our connection to the digital world even deeper than it already is in both professional and personal settings. … Depending on how we develop and apply AI systems, there is both an opportunity for AI to mostly empower human intelligence and creativity by scaffolding their intellectual pursuits, as well as a threat that AI will erode intelligence and creativity by forcing human behavior into following AI-amenable patterns.” – Bart Knijnenberg, professor of human-centered computing, Clemson University

“By 2035, the relationship between humans and AI will likely evolve from today’s tool-based interaction into a complex symbiotic partnership, fundamentally reshaping what it means to be human while preserving core aspects of human identity and agency. This transformation will manifest across three key dimensions: cognitive augmentation, social relationships and institutional structures. … AI will likely develop as a cognitive enhancement layer, creating ‘augmented intelligence’ that supports rather than replaces human judgment. Human feedback in the AI lifecycle is critical here as it ensures that AI systems align with human values and preferences. By iteratively incorporating feedback from diverse users, AI can be trained to enhance human decision-making while respecting individual agency and cultural contexts.” – Wayne Wei Wang, Ph.D. candidate in computational legal studies at the University of Hong Kong and CyberBRICS Fellow at FGV Rio Law School, Brazil