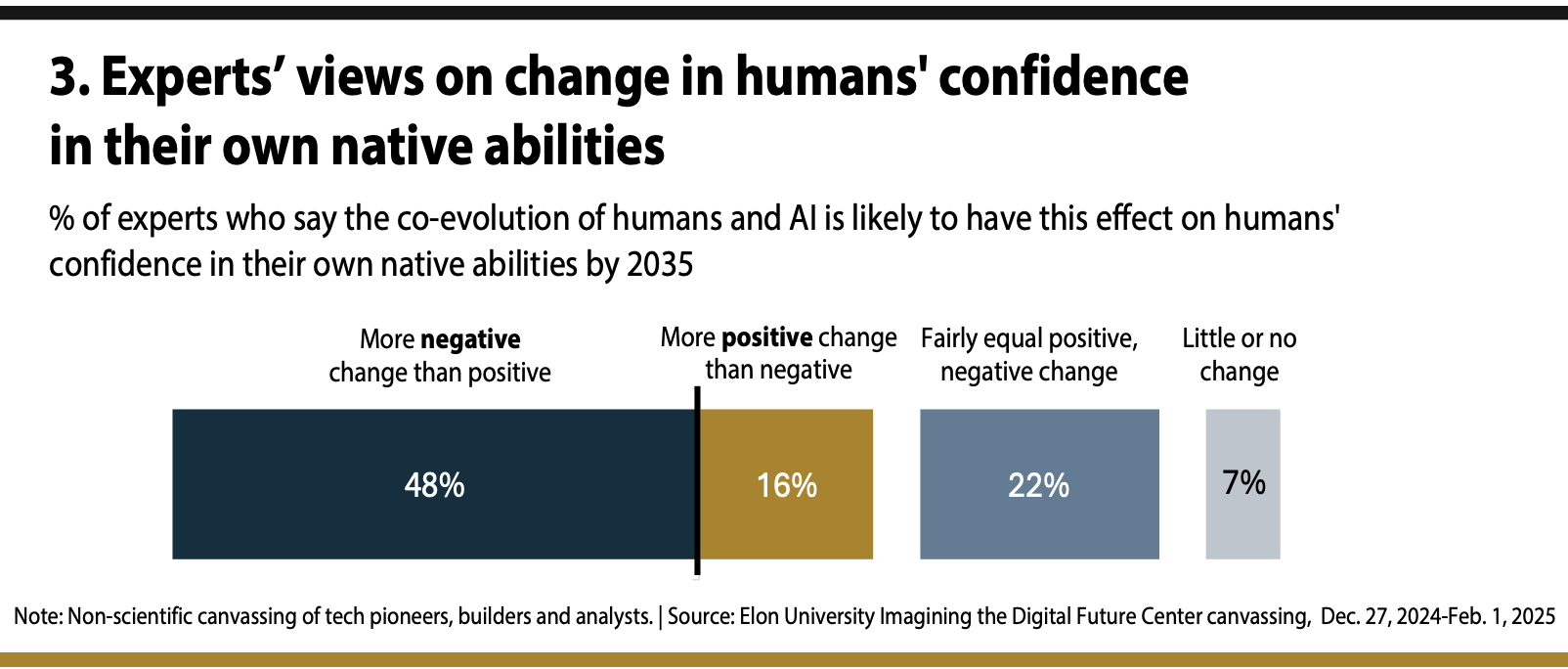

These experts expected in 2025 that by 2035 there will be…

48% – More negative change than positive change

16% – More positive change than negative change

22% – Fairly equal positive and negative change

7% – Little to no change

A notable share of these experts focused on the problems that might arise as humans deepen their dependence on AI systems and agents and begin to see them as more capable of making choices than they truly are. This could lead people to lose confidence in their own judgment and faith in themselves. It could also diminish the value people expect from human involvement in resolving conflicts, handling complex situations and retaining lessons learned from past experience, and erode humans’ capacity for self-reliance. A few said humans will be able to gain knowledge and have uplifting experiences through AI systems that build their confidence in their native abilities and understanding of the world, just as humans gain such wisdom from other humans.

A selection of related quotes extracted from these experts’ longer essays:

“With AI increasingly embedded in everything from personal decision-making to public services from health to transport and everything in between (the ‘digital public infrastructure’), humans could become over-reliant on systems we barely understand – and outcomes we have no control over. … This dependence on opaque systems raises existential concerns about autonomy, resilience and what happens when systems fail or are manipulated, and in cases of mistaken identity and punishment in a surveillance society. It undermines authentic human intelligence unmediated by AI.” – Tracey Follows, CEO of Futuremade, a leading UK-based strategic consultancy

“Human competence will atrophy; AIs will clash like gladiators in law, business and politics; religious movements will worship deity avatars; trust will be bought and sold. Because they will be built under market forces, AIs will present themselves as helpful, instrumental, and eventually indispensable. … To play serious roles in life and society, AIs cannot be values-neutral. They will sometimes apparently act cooperatively on our behalf, but at other times, by design, they will act in opposition to people individually and group-wise. AI-brokered demands will not only dominate in any contest with mere humans, but oftentimes, persuade us into submission that they’re right after all.” – Eric Saund, independent research scientist applying cognitive science and AI in conversational agents

“AI romantic partners will provide idealized relationships that make human partnerships seem unnecessarily difficult. The workplace will be transformed as AI systems take over cognitive and creative tasks. This promises efficiency but risks reducing human agency, confidence and capability. Economic participation will be controlled by AI platforms, potentially threatening individual autonomy. … Basic skills in arithmetic, navigation and memory are likely to be diminished through AI dependence. But most concerning is the potential dampening of human drive and ambition. Why strive for difficult achievements when AI can provide simulated success and satisfaction?” – Nell Watson, president of EURAIO, the European Responsible Artificial Intelligence Office and an AI Ethics expert with IEEE

“The education systems are not expert at teaching discernment, and that will be the primary difference, individual to individual, between additive AI and misleading AI. People who think before they speak will still do so, and in a human fashion. Their thoughts may have been expanded by what they’ve seen/heard from AIs, but the end results will still be human. On the other hand, people who accept what others say may take it literally and largely as fact will probably do the same with AIs, and that could end up being a self-reinforcing pattern. Those who unquestioningly accept AI outputs may lose trust in their own reasoning, drifting from reality and weakening their native intelligence.” – Glenn Ricart, founder and CTO of U.S. Ignite, who previously served as DARPA’s liaison to the Clinton White House

“My pessimism regarding what may come by 2035 arises from the recent and likely future developments of AI, machine learning, LLMs, and other (quasi-) autonomous systems. Such systems are fundamentally undermining the opportunities and affordances needed to acquire and practice valued human virtues. This will happen in two ways: first, patterns of deskilling, i.e., the loss of skills, capacities, and virtues essential to human flourishing and robust democratic societies, and then, second, patterns of no-skilling, the elimination of the opportunities and environments required for acquiring such skills and virtues in the first place. We fall in love with the technologies of our own enslavement. … The more we spend time amusing ourselves … the less we pursue the fostering of those capacities and virtues essential to human autonomy, flourishing and civil/democratic societies. Indeed, at the extreme in ‘Brave New World’ we no longer suffer from being unfree because we have simply forgotten – or never learned in the first place – what pursuing human autonomy was about. … The more that we offload these capacities to these systems, the more we thereby undermine our own skills and abilities.” – Charles Ess, professor emeritus of ethics at the University of Oslo

“AI/machine learning tools are better equipped than humans to discover previously hidden aspects of the way the world works. … They ‘see’ things that we cannot. … That is a powerful new way to discover truth. The question is whether these new AI tools of discovery will galvanize humans or demoralize them. Some of the things I think will be in play because of the rise of AI: our understanding of free will, creativity, knowledge, fairness and larger issues of morality, the nature of causality, and, ultimately, reality itself. … I am opting for a very optimistic view that machine learning can reveal things that we have not seen during the millennia we have been looking upwards for eternal universals. I hope they will inspire us to look down for particulars that can be equally, maybe even more, enlightening.” – David Weinberger, senior researcher and fellow at Harvard University’s Berkman Klein Center for Internet & Society