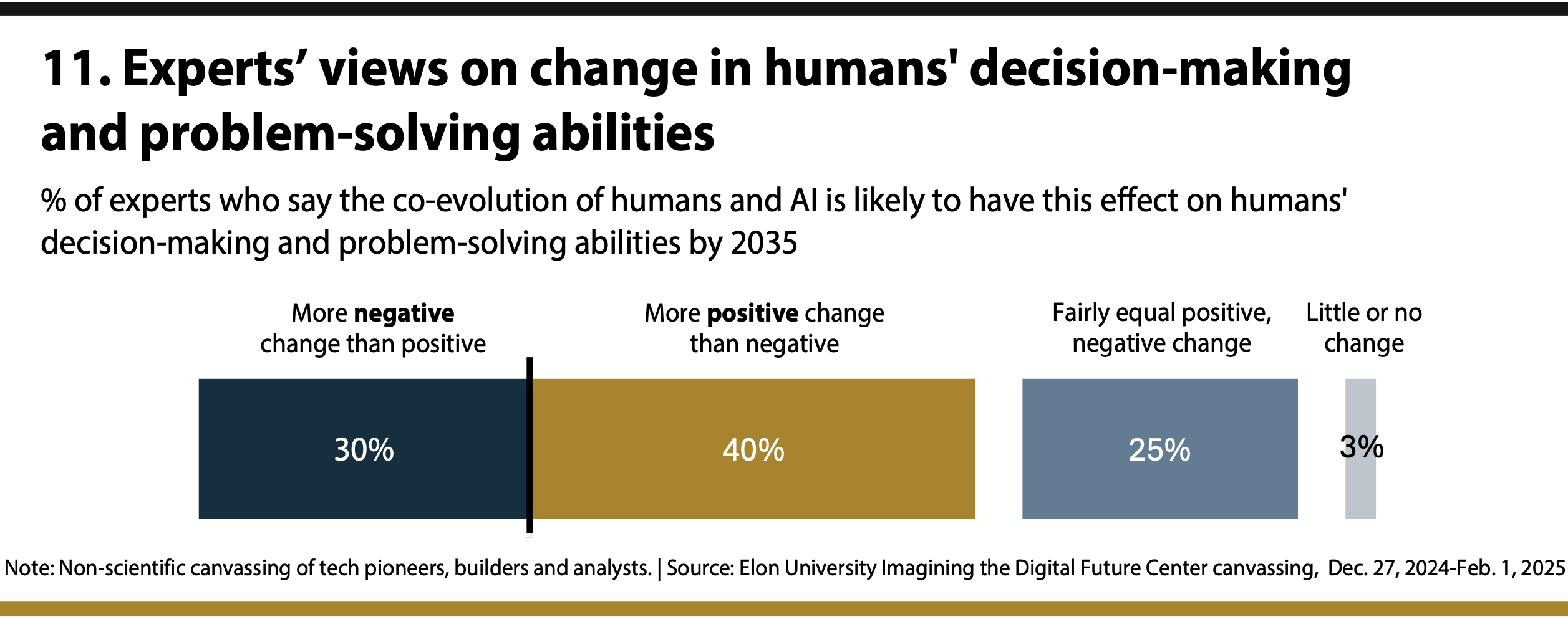

These experts expected in 2025 that by 2035 there will be…

30% – More negative change than positive change

40% – More positive change than negative change

25% – Fairly equal positive and negative change

3% – Little to no change

The experts’ views were more likely to be positive than negative about the influence that humans’ further adoption of AI tools and systems will have on their decision-making and problem-solving skills. A number of them expect that the implementation of AI and the knowledge gained through the use of AI tools will expand humans’ individual capacities in decision-making and problem-solving. Some predicted that when AI systems take over low-priority tasks, the reduced cognitive burden will allow people to shift their attention to more important issues and tasks. Some expect that the knowledge gained through the use of AI tools will make people more insightful about how they make choices when they rely on their own human capabilities alone. Others worry, however, about the negative implications of humans deferring all of their critical thinking to machine intelligence.

A selection of related quotes extracted from these experts’ longer essays:

“Unlike in today’s monolithic systems driven by profit motives … imagine a world where you can visualize the ripple effects of your actions across generations. You could explore the environmental consequences of your consumption habits, assess how your parenting choices might shape your children’s futures, or even foresee how shifts in your career might contribute to societal progress. These uses of AI would not only enrich individual decision-making but also cultivate within humanity itself a collective sense of responsibility for the broader impact of our choices. At the heart of this vision lies personalized AI tailored to the unique needs and aspirations of each individual.” – Liselotte Lyngsø, founder of Future Navigator, a consultancy in Copenhagen, Denmark

“There is a high degree of probability that we will have built, by 2035, what I call ‘the last human tool’ or artificial general intelligence (AGI). … If humanity is able to stand the waves of change that this advanced intelligence will bring, it could be a bright future. … Education is poised to transform from a system focused primarily on knowledge acquisition to one that values creativity, problem-solving and the cultivation of unique personal skills. The traditional emphasis on knowledge retention could diminish, encouraging humans to focus more on wisdom and interpretation rather than raw data.” – David Vivancos, CEO at MindBigData.com and author of “The End of Knowledge”

“The boundary between human and machine may blur as AI becomes more integrated into human decision-making. AI-driven assistants and advisors could influence our choices, subtly reshaping how we think and act. While this partnership may lead to more efficiencies, it risks diminishing human agency if individuals begin to defer critical thinking to algorithms.” – Laura Montoya, founder and executive director at Accel AI Institute, general partner at Accel Impact Ventures and president of Latinx in AI

“As humans begin to embrace more-advanced AI, society is viewing it as the solver of its problems. It sees AI as the thinker and society as the beneficiary of that thinking. As this continues, the perceived necessity for humans to ‘think’ loses ground as does humans’ belief in the necessity to learn, retain and fully comprehend information. The traditional amount of effort humans invested in the past in building and honing the critical thinking skills required to live day-to-day and solve life and work problems may be perceived as unnecessary now that AI is available to offer solutions, direction and information – in reality and in perception making life much easier. As we are evolutionarily programmed to conserve energy, our tools are aligned to conserving energy and therefore we immerse ourselves in them. We become highly and deeply dependent on them. … We will implement AI to be a sounding board, to take on advocacy on our behalf, to be an active and open listening agent that meets the interaction needs we crave and completes transactions efficiently. We will therefore change and in many ways evolve to the point at which the once-vital necessity to ‘think’ begins to seem less and less important and more difficult to achieve. Our core human traits and our behaviors will change, because we will have changed.” – Kevin Novak, founder and CEO of futures firm 2040 Digital and author of “The Truth About Transformation”

“Because they will be built under market forces, AIs will present as helpful, instrumental and eventually, indispensable. This dependence will allow human competence to atrophy. … AIs cannot be values-neutral. They will sometimes apparently act cooperatively on our behalf, but at other times, by design, they will act in opposition to people individually and group-wise. AI-brokered demands will not only dominate in any contest with mere humans but oftentimes persuade us into submission that they’re right after all. And, as instructed by their individual, corporate and government owners, AI agents will act in opposition to one another as well. Negotiations will be delegated to AI specialists possessing superior knowledge and game-theoretic skills. Humans will struggle to interpret bewildering clashes among AI gladiators in business, law, and international conflict.” – Eric Saund, independent research scientist and expert in cognitive architectures

“Without self-motivation to use AI as a learning tool, users merely receive answers from AI (sometimes incorrect ones). Similarly, in areas like creativity, decision-making and problem-solving, AI tends to do it for users rather than encourage the users to practice those skills. People naturally gravitate toward the path of least resistance, turning to AI for immediate solutions rather than working hard on a solution themselves.” – Risto Uuk, European Union research lead for the Future of Life Institute, based in Brussels, Belgium

“Humans value convenience over risk. How often do we think ‘it won’t happen to me!’? It seems inevitable that there will be serious consequences of enabling these complex tools to take action with real-world effects. There will be calls for legislation, regulation and controls over the application of these systems.” – Vint Cerf, vice president and chief Internet evangelist for Google