“Given the past decade of AI research results, especially the emergence of generative, multi-modal large language models (LLMs), we can anticipate significant impact by 2035. These tools are surprising in their capability to produce coherent output in response to creative prompts.

“It is also clear that these systems can and do produce counterfactual output even if trained on factual material. Some of this hallucination is the result of a lack of context during the weight training of the multi-layer neural models. The ‘fill in the blanks’ method of training and backpropagation does not fully take into account the contexts in which the tokens of the model appear.

“There are attempts to fine-tune the models using, for example, reinforcement learning from human feedback (RLHF). These methods, among others including substantial pre-prompting and large context window implementation, can guide the generative output away from erroneous results, but they are not perfect.

“The introduction of agentic models that are enabled to take actions, including those that might affect the real world (e.g., financial transactions), has potential risks. Flaws in consequential reasoning, ‘misunderstanding’ between communicating agentic models, and complex dependencies among systems of such models all point to the potential for considerable turmoil in an increasingly online world.

“Standard semantics and syntax for what I will call ‘interbot’ exchanges will be necessary. There is already progress along these lines, for example at the schema.org website. Even with these tools, natural language LLM discourse with humans will lead to misunderstandings, just as human interactions do.

“We may find it hard to distinguish between artificial personalities and real ones. That may result in a search for reliable proof of humanity so that we and bots can tell the difference. Isaac Asimov’s robot stories drew on this dilemma with sometimes profound consequences.

“The ease of use of these models and their superficial appearance of rationality will almost certainly lead to unwarranted trust. The LLMs produce the verisimilitude of human discourse. It has been observed that LLMs sound persuasive even when they are wrong, because their output sounds convincingly confident.

“There are efforts to link the LLMs to other models trained with specialized knowledge and capabilities (e.g., mathematical manipulation, knowledge graphs with real-world information) to reduce the likelihood of spurious output, but these are still unreliable. Perhaps by 2035 we will have improved the situation significantly, but increased dependence on these systems will also increase the potential for cascade failures.

“Humans value convenience over risk. How often do we think ‘it won’t happen to me!’? It seems inevitable that there will be serious consequences of enabling these complex tools to take action with real-world effects. There will be calls for legislation, regulation and controls over the application of these systems.

“On the positive side, these tools may prove very beneficial to research that needs to operate at scale. A good example is the Google DeepMind AlphaFold model that predicted the folded molecular structure of 200 million proteins that could be generated from human DNA. Other large-scale analytical solutions include the discovery of hazardous asteroids from large amounts of observational data, the control of plasmas using trained machine-learning models and near-term, high-accuracy weather prediction.

“The real question is whether we will have mastered and understood the mechanisms that produce model outputs sufficiently to limit excursions into harmful behavior. It is easy to imagine that ease of use of AI may lead to unwarranted and uncritical reliance on applications.

“It is already apparent in 2025 that we are deeply reliant on software in networked environments. There are literally millions of applications accessible on our mobiles, laptops and notebooks. New interaction modes including voice add to convenience and dependence and potential risk.

“Without doubt, we are going to need norms and regulations to recover from various kinds of failure for the same reason that the introduction of automobiles eventually led to regulation of their manufacture and use as well as training programs to increase the likelihood of safe usage and law enforcement where irresponsible behavior surfaces.

“For the same reasons that many tasks are done differently today than they were 50 or even 25 years ago, AI will alter our preferred choices for getting things done. Today we have the choice of ordering things online to be delivered to our doorsteps that we would typically have had to pick up from a store. Of course there was the Sears Catalog of the late 19th century, postal and other delivery services, overnight services such as FedEx, UPS, DHL and now Amazon and – soon – drone delivery.

“By analogy, many of the things we might have done ourselves will be done by AI agents at our request. This could range from writing a program or a poem to ordering plane or theatre tickets. Multimodal AI services already translate languages, render text-to-speech and speech-to-text, draw pictures or compose music or essays on demand and prepare business plans on request.

“It will be commonplace in 2035 to have local bio-sensors (watch, smartphone accessories, Internet of Things devices) to capture medical symptoms and conditions for remote, AI-based diagnosis and possibly even recommended treatment.

“AI agents are already being used to generate ideas, respond to questions and write speeches and essays. They are used to summarize long reports and to generate longer ones (!).

“AI tools will become increasingly capable general-purpose assistants. We will need them to keep audit trails so we can find out what, if anything, has gone wrong and how and also to understand more fully how they work when they produce useful results. It would not surprise me to find that the use of AI-based products will induce liabilities, liability insurance and regulations regarding safety by 2035 or sooner.”

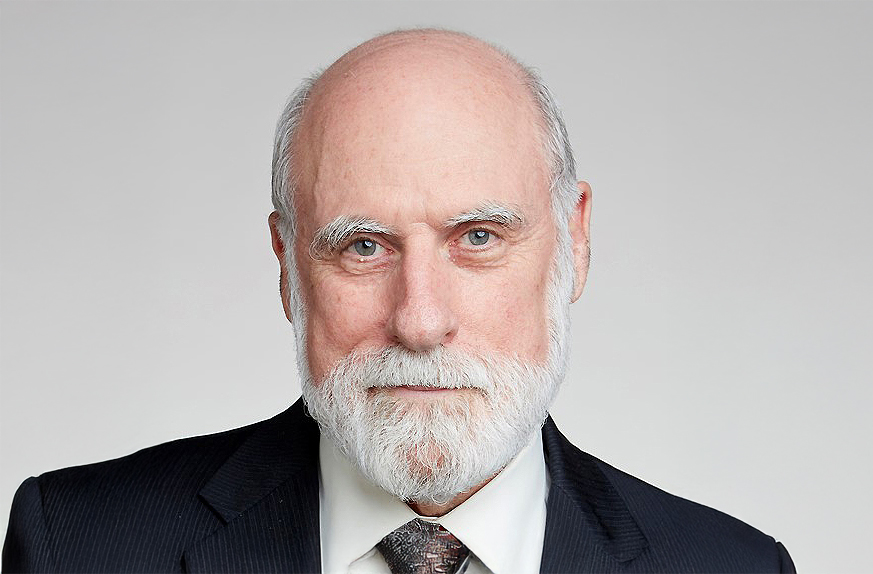

This essay was written in January 2025 in reply to the question: Over the next decade, what is likely to be the impact of AI advances on the experience of being human? How might the expanding interactions between humans and AI affect what many people view today as ‘core human traits and behaviors’? This and nearly 200 additional essay responses are included in the 2025 report “Being Human in 2035.”